Preface

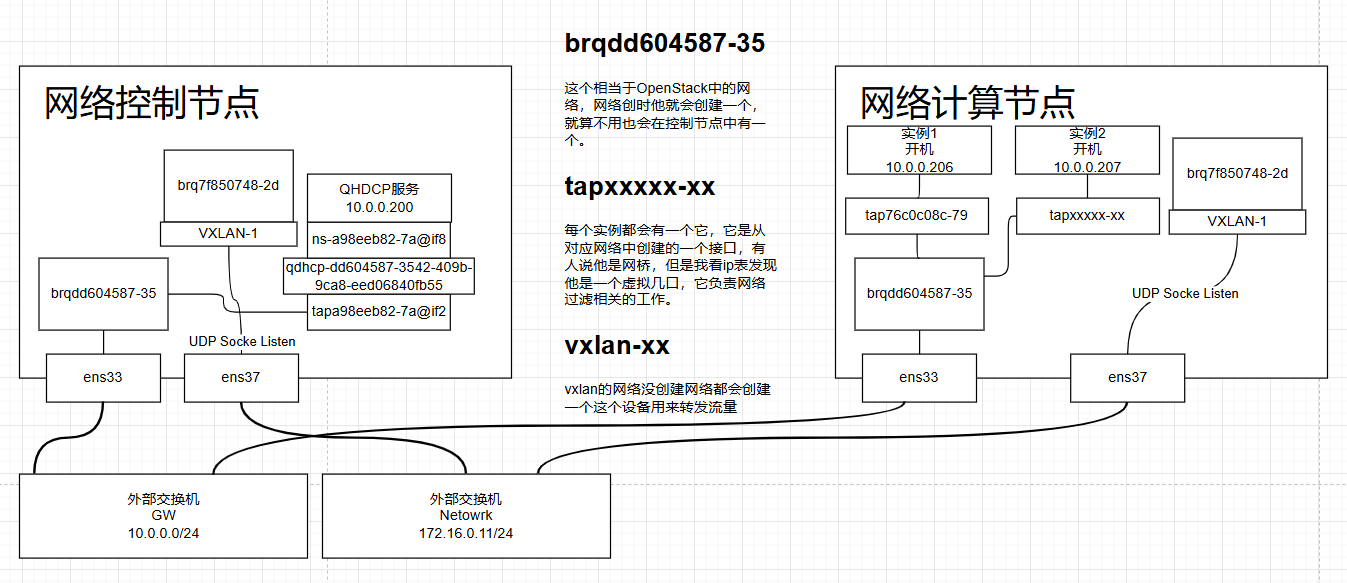

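Building on my component overview article, this post takes a separate look at Neutron, the OpenStack networking component. Material online is fairly limited, so everything here is only my own understanding; if something is wrong, corrections are welcome. My environment uses Linux Bridge + VXLAN. For the exact configuration, see the following parts of the setup guides.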

Official docs 1: https://docs.openstack.org/neutron/stein/install/controller-install-rdo.html

Official docs 2: https://docs.openstack.org/neutron/stein/install/controller-install-option1-rdo.html

Official docs 3: https://docs.openstack.org/neutron/stein/install/controller-install-option2-rdo.html

linux bridge: https://blog.boychai.xyz/index.php/archives/83/

VXLAN: https://blog.boychai.xyz/index.php/archives/88/

Provider network

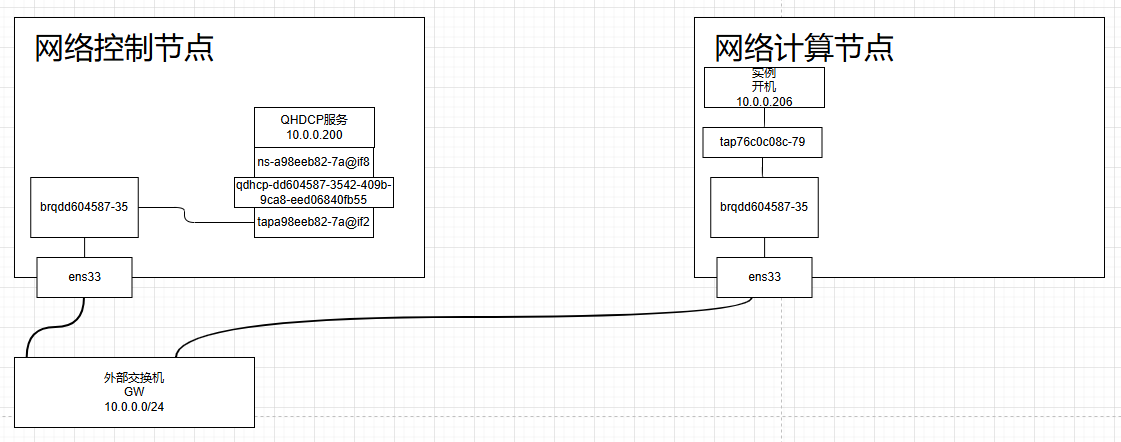

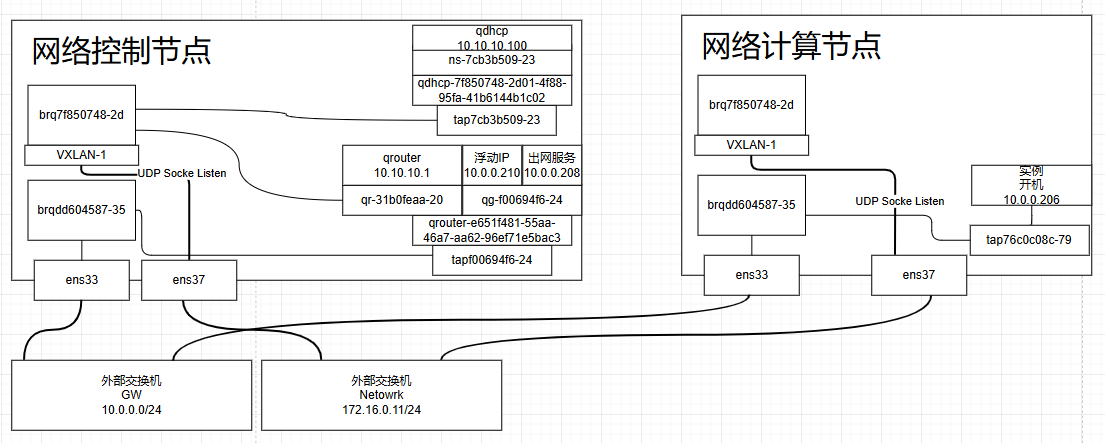

Before any self-service network exists, create one instance. The OpenStack network topology is shown below.

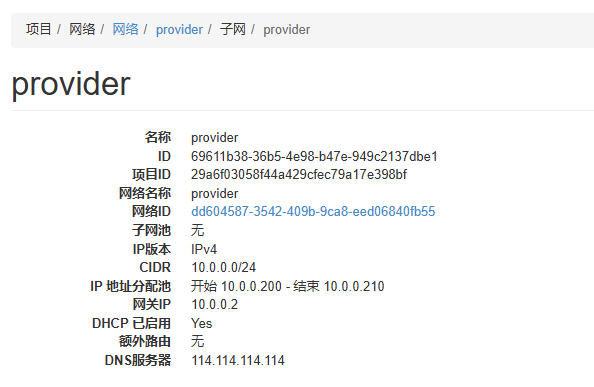

The network details are as follows.

While the instance is powered off, the network on the controller (network) node looks like this:

[root@controller ~]# ip link

......

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

8: tapa98eeb82-7a@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 0a:04:25:5a:e2:67 brd ff:ff:ff:ff:ff:ff link-netnsid 2

9: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

[root@controller ~]# brctl show

bridge name bridge id STP enabled interfaces

brqdd604587-35 8000.000c2908b59e no ens33

tapa98eeb82-7a

[root@controller ~]# ip a

......

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

inet6 fe80::ad6b:bdb0:9b14:c54d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

8: tapa98eeb82-7a@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master brqdd604587-35 state UP group default qlen 1000

link/ether 0a:04:25:5a:e2:67 brd ff:ff:ff:ff:ff:ff link-netnsid 2

9: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

inet 10.0.0.11/24 brd 10.0.0.255 scope global brqdd604587-35

valid_lft forever preferred_lft forever

inet6 fe80::d061:3cff:fedd:3f85/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# ip netns

qdhcp-dd604587-3542-409b-9ca8-eed06840fb55 (id: 2)

[root@controller ~]# ip netns exec qdhcp-dd604587-3542-409b-9ca8-eed06840fb55 ip a

...

2: ns-a98eeb82-7a@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:53:6f:70 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.200/24 brd 10.0.0.255 scope global ns-a98eeb82-7a

valid_lft forever preferred_lft forever

inet 169.254.169.254/16 brd 169.254.255.255 scope global ns-a98eeb82-7a

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe53:6f70/64 scope link

valid_lft forever preferred_lft forever

On the compute node that hosts the instance, the network looks like this:

[root@compute1 ~]# ip link

......

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

6: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

[root@compute1 ~]# brctl show

bridge name bridge id STP enabled interfaces

brqdd604587-35 8000.000c29a8d7e0 no ens33

[root@compute1 ~]# ip a

......

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

inet6 fe80::ad6b:bdb0:9b14:c54d/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::5597:3a9:a4bd:ae3a/64 scope link noprefixroute

valid_lft forever preferred_lft forever

6: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.31/24 brd 10.0.0.255 scope global brqdd604587-35

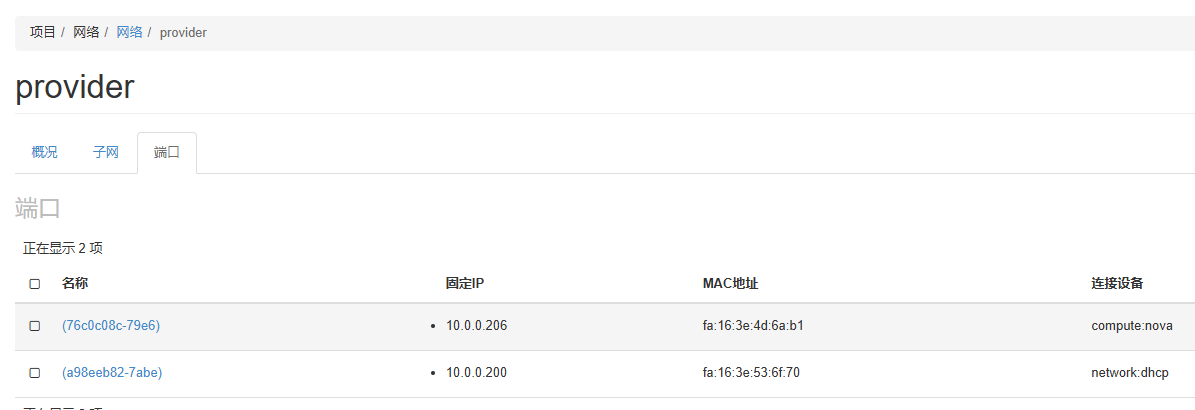

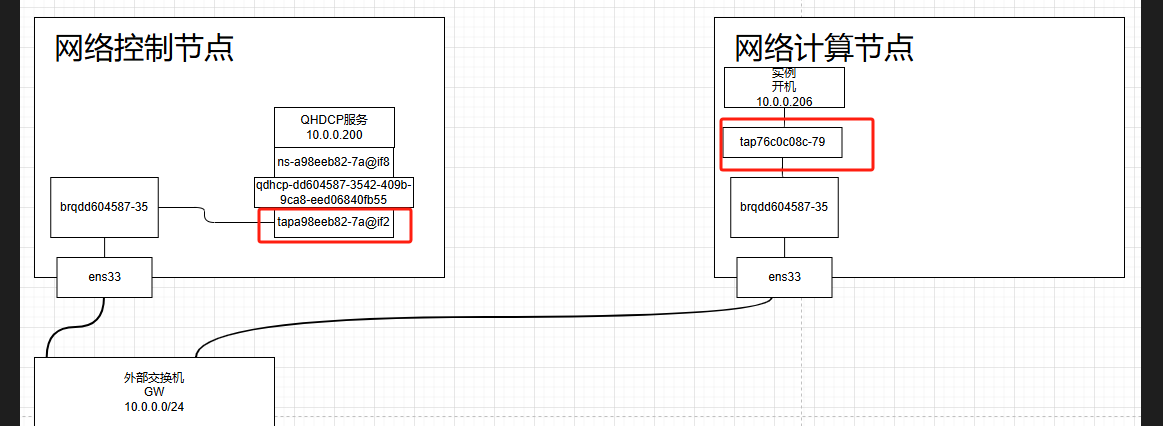

valid_lft forever preferred_lft forever可以看到网络的iddd604587-3542-409b-9ca8-eed06840fb55会在网络控制节点和实例对应计算节点上创建一个网卡,并且取id的前10位作为网卡的名字,即brqdd604587-35,然后就是网络中的端口,可以参考上面网络端口的图片,上面有一个network:dhcp的端口,我感觉这个可以理解为在这个网络上的设备,这个dhcp的端口的名字为a98eeb82-7abe,可以对为网络控制节点中的netns(网络命名空间),里面的一个设备ns-a98eeb82-7a@if8命名格式也是前10位,可以知道这个接口是当前网络中的DHCP服务,其中这个设备通过link-netnsid和@if8的标识可以判断出他是一个虚拟接口(veth pair对),通过@if8可以看出他是从主机的tapa98eeb82-7a网卡接出来的,tapa98eeb82-7a是网桥brqdd604587-35中的接口。然后当前两台机器的网桥brctl show中可以看到brqdd604587-35都是ens33的网桥。当前它们的网络架构如下
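The naming pattern seen in the output above can be written down as a small sketch. This is only my reading of the device names in this environment, not code taken from Neutron; the function names are made up for illustration, and the port ID is the truncated one quoted above.

```python
# Sketch of the naming pattern observed above (function names are hypothetical).
# Each device name is a fixed prefix plus the first 11 characters of the UUID:
# 8 hex digits, the hyphen, and 2 more hex digits.

def short_id(resource_id: str) -> str:
    """Return the first 11 characters of a Neutron network or port ID."""
    return resource_id[:11]

def bridge_name(network_id: str) -> str:
    """Linux bridge created for a network, e.g. brqdd604587-35."""
    return "brq" + short_id(network_id)

def tap_name(port_id: str) -> str:
    """Host-side device for a port, e.g. tapa98eeb82-7a."""
    return "tap" + short_id(port_id)

def dhcp_device_name(port_id: str) -> str:
    """Device inside the qdhcp namespace for the network:dhcp port."""
    return "ns-" + short_id(port_id)

def dhcp_namespace(network_id: str) -> str:
    """The DHCP namespace uses the full network ID."""
    return "qdhcp-" + network_id

NETWORK_ID = "dd604587-3542-409b-9ca8-eed06840fb55"
DHCP_PORT_ID_PREFIX = "a98eeb82-7abe"  # only the beginning of the port ID is quoted above

print(bridge_name(NETWORK_ID))
print(tap_name(DHCP_PORT_ID_PREFIX))
print(dhcp_device_name(DHCP_PORT_ID_PREFIX))
print(dhcp_namespace(NETWORK_ID))
```

Comparing the printed names with the ip link, brctl show and ip netns output is a quick way to find which network or port a device belongs to.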

Once the instance is powered on, the picture changes as shown below. The controller node looks like this:

[root@controller ~]# ip link

......

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

8: tapa98eeb82-7a@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 0a:04:25:5a:e2:67 brd ff:ff:ff:ff:ff:ff link-netnsid 2

9: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

[root@controller ~]# brctl show

bridge name bridge id STP enabled interfaces

brqdd604587-35 8000.000c2908b59e no ens33

tapa98eeb82-7a

[root@controller ~]# ip a

......

8: tapa98eeb82-7a@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master brqdd604587-35 state UP group default qlen 1000

link/ether 0a:04:25:5a:e2:67 brd ff:ff:ff:ff:ff:ff link-netnsid 2

9: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

inet 10.0.0.11/24 brd 10.0.0.255 scope global brqdd604587-35

valid_lft forever preferred_lft forever

inet6 fe80::d061:3cff:fedd:3f85/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# ip netns

qdhcp-dd604587-3542-409b-9ca8-eed06840fb55 (id: 2)

[root@controller ~]# ip netns exec qdhcp-dd604587-3542-409b-9ca8-eed06840fb55 ip a

......

2: ns-a98eeb82-7a@if8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:53:6f:70 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.200/24 brd 10.0.0.255 scope global ns-a98eeb82-7a

valid_lft forever preferred_lft forever

inet 169.254.169.254/16 brd 169.254.255.255 scope global ns-a98eeb82-7a

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe53:6f70/64 scope link

valid_lft forever preferred_lft forever

On the compute node that hosts the instance, the network now looks like this:

[root@compute1 ~]# ip link

......

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

6: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

8: tap76c0c08c-79: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UNKNOWN mode DEFAULT group default qlen 1000

link/ether fe:16:3e:4d:6a:b1 brd ff:ff:ff:ff:ff:ff

[root@compute1 ~]# brctl show

bridge name bridge id STP enabled interfaces

brqdd604587-35 8000.000c29a8d7e0 no ens33

tap76c0c08c-79

[root@compute1 ~]# ip a

......

6: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.31/24 brd 10.0.0.255 scope global brqdd604587-35

valid_lft forever preferred_lft forever

8: tap76c0c08c-79: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UNKNOWN group default qlen 1000

link/ether fe:16:3e:4d:6a:b1 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc16:3eff:fe4d:6ab1/64 scope link

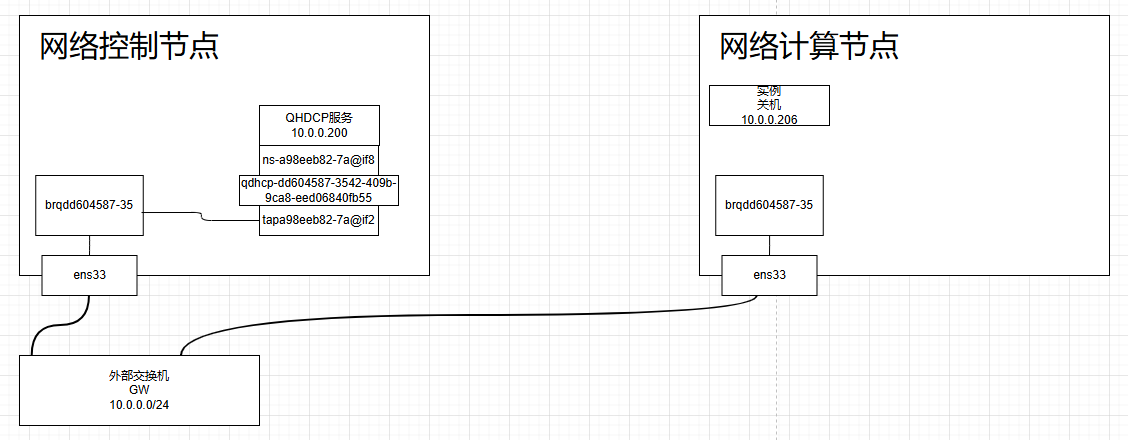

valid_lft forever preferred_lft forever改变主要是在计算节点,在计算节点中他的网桥关联了一个新的接口tap76c0c08c-79,这个接口是怎么来的呢,可以参考虚机的配置

[root@compute1 ~]# virsh list

Id Name State

----------------------------------------------------

3 instance-00000010 running

[root@compute1 ~]# cd /etc/libvirt/qemu/

[root@compute1 qemu]# ls

instance-00000010.xml networks

In instance-00000010.xml, the network interface is configured as follows:

<interface type='bridge'>

<mac address='fa:16:3e:4d:6a:b1'/>

<source bridge='brqdd604587-35'/>

<target dev='tap76c0c08c-79'/>

<model type='virtio'/>

<mtu size='1500'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

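</interface>

It defines a bridged virtual interface on brqdd604587-35, named tap76c0c08c-79, for the VM to use. The network architecture now looks like this.

As the component overview explains, the provider network does nothing more in actual communication than map onto the real physical network, so the VM can be thought of as plugged directly into the external switch. If something looks off, see the experiments in the Reflections section.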
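To make the link between the libvirt definition and the host devices concrete, here is a small sketch that reads the interface element shown earlier and pulls out the bridge and tap names. The XML is copied from above (the PCI address line is left out); the helper names are made up. The last helper reflects what the ip link output shows: the host-side tap carries the guest MAC with the first octet changed to fe, which as far as I know is how libvirt names tap MACs.

```python
import xml.etree.ElementTree as ET

# Interface element copied from instance-00000010.xml above (PCI address omitted).
INTERFACE_XML = """
<interface type='bridge'>
  <mac address='fa:16:3e:4d:6a:b1'/>
  <source bridge='brqdd604587-35'/>
  <target dev='tap76c0c08c-79'/>
  <model type='virtio'/>
  <mtu size='1500'/>
</interface>
"""

def describe_interface(xml_text: str) -> dict:
    """Extract the fields that tie the VM to the Linux bridge."""
    iface = ET.fromstring(xml_text.strip())
    return {
        "type": iface.get("type"),
        "guest_mac": iface.find("mac").get("address"),
        "bridge": iface.find("source").get("bridge"),
        "tap": iface.find("target").get("dev"),
    }

def host_side_mac(guest_mac: str) -> str:
    """MAC seen on the host tap device: guest MAC with the first octet set to fe."""
    return "fe" + guest_mac[2:]

info = describe_interface(INTERFACE_XML)
print(info)
print(host_side_mac(info["guest_mac"]))
```

The tap name and the fe-prefixed MAC match interface 8 in the compute node's ip link output, which is how I confirmed that tap76c0c08c-79 belongs to this instance.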

Self-service VXLAN network

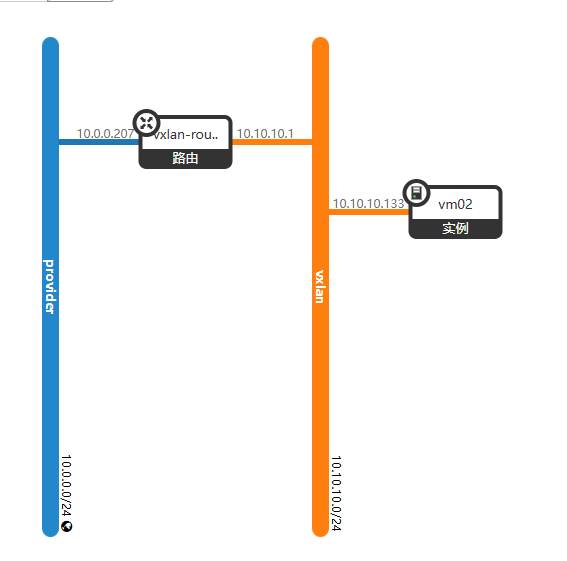

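For the self-service VXLAN network, the topology is as follows.

The network configuration is as follows.

Power on the instance and look at the network. On the controller node: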

[root@controller ~]# ip link

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

4: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:b2 brd ff:ff:ff:ff:ff:ff

7: tap7cb3b509-23@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UP mode DEFAULT group default qlen 1000

link/ether 9a:4f:c8:45:64:e7 brd ff:ff:ff:ff:ff:ff link-netnsid 1

8: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 0a:b6:1f:77:c4:1b brd ff:ff:ff:ff:ff:ff

9: brq7f850748-2d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 0a:b6:1f:77:c4:1b brd ff:ff:ff:ff:ff:ff

10: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

14: tapf00694f6-24@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 5a:b6:d1:fe:08:64 brd ff:ff:ff:ff:ff:ff link-netnsid 0

15: tap31b0feaa-20@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UP mode DEFAULT group default qlen 1000

link/ether 3e:f5:9d:44:57:29 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@controller ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

inet6 fe80::ad6b:bdb0:9b14:c54d/64 scope link noprefixroute

valid_lft forever preferred_lft forever

4: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:08:b5:b2 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.11/24 brd 172.16.0.255 scope global noprefixroute ens37

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe08:b5b2/64 scope link

valid_lft forever preferred_lft forever

7: tap7cb3b509-23@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UP group default qlen 1000

link/ether 9a:4f:c8:45:64:e7 brd ff:ff:ff:ff:ff:ff link-netnsid 1

8: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UNKNOWN group default qlen 1000

link/ether 0a:b6:1f:77:c4:1b brd ff:ff:ff:ff:ff:ff

9: brq7f850748-2d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 0a:b6:1f:77:c4:1b brd ff:ff:ff:ff:ff:ff

inet6 fe80::44b9:8bff:feee:1639/64 scope link

valid_lft forever preferred_lft forever

10: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:08:b5:9e brd ff:ff:ff:ff:ff:ff

inet 10.0.0.11/24 brd 10.0.0.255 scope global brqdd604587-35

valid_lft forever preferred_lft forever

inet6 fe80::2073:d5ff:fedc:5d7/64 scope link

valid_lft forever preferred_lft forever

14: tapf00694f6-24@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master brqdd604587-35 state UP group default qlen 1000

link/ether 5a:b6:d1:fe:08:64 brd ff:ff:ff:ff:ff:ff link-netnsid 0

15: tap31b0feaa-20@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UP group default qlen 1000

link/ether 3e:f5:9d:44:57:29 brd ff:ff:ff:ff:ff:ff link-netnsid 0

[root@controller ~]# ip netns

qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 (id: 0)

qdhcp-7f850748-2d01-4f88-95fa-41b6144b1c02 (id: 1)

[root@controller ~]# ip netns exec qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 ip a

2: qg-f00694f6-24@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:6a:e4:80 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.208/24 brd 10.0.0.255 scope global qg-f00694f6-24

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe6a:e480/64 scope link

valid_lft forever preferred_lft forever

3: qr-31b0feaa-20@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:3d:18:d0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.1/24 brd 10.10.10.255 scope global qr-31b0feaa-20

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe3d:18d0/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# ip netns exec qdhcp-7f850748-2d01-4f88-95fa-41b6144b1c02 ip a

2: ns-7cb3b509-23@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:85:9c:4c brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.100/24 brd 10.10.10.255 scope global ns-7cb3b509-23

valid_lft forever preferred_lft forever

inet 169.254.169.254/16 brd 169.254.255.255 scope global ns-7cb3b509-23

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe85:9c4c/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# brctl show

bridge name bridge id STP enabled interfaces

brq7f850748-2d 8000.0ab61f77c41b no tap31b0feaa-20

tap7cb3b509-23

vxlan-1

brqdd604587-35 8000.000c2908b59e no ens33

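tapf00694f6-24

There is a lot here, so let's first work through the controller node. Start with the bridges. There are two, and they line up with the network IDs (see the screenshots above): the VXLAN network's ID is 7f850748-2d01-4f88-95fa-41b6144b1c02, so its bridge is brq7f850748-2d, i.e. brq plus the first 11 characters of the ID.

Take brqdd604587-35 first. This is the external provider network, bridged to ens33, and it has a port tapf00694f6-24. Following that port leads into the qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 namespace, where its peer is qg-f00694f6-24@if14 with IP 10.0.0.208. This is presumably the router's outbound address. It is also recorded among the provider subnet's ports, which you can check in the cluster.

Now the VXLAN bridge brq7f850748-2d. Besides vxlan-1 it has two virtual interfaces, tap31b0feaa-20 and tap7cb3b509-23, whose peers sit in namespaces. The peer of tap31b0feaa-20 is qr-31b0feaa-20 in the qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 namespace. Its IP is 10.10.10.1, which the cluster shows to be this subnet's gateway. The other interface in that namespace is the one whose peer sits on the provider bridge. The peer of the remaining interface, tap7cb3b509-23, is ns-7cb3b509-23 in the qdhcp-7f850748-2d01-4f88-95fa-41b6144b1c02 namespace, which serves DHCP.

The compute node's network information is as follows: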
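The pairing I did by eye on the controller node can be sketched in a few lines. The device and namespace names are copied from the output above; the role descriptions are my own interpretation of the qg, qr and ns prefixes, and the function name is made up.

```python
# Pair each host-side tap device with its peer inside a namespace by the shared
# 11-character port ID suffix. Names are taken from the controller output above.

HOST_TAPS = ["tapf00694f6-24", "tap31b0feaa-20", "tap7cb3b509-23"]

NAMESPACE_DEVICES = {
    "qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3": ["qg-f00694f6-24", "qr-31b0feaa-20"],
    "qdhcp-7f850748-2d01-4f88-95fa-41b6144b1c02": ["ns-7cb3b509-23"],
}

# My interpretation of the prefixes, not an official definition.
ROLES = {
    "qg": "router gateway (provider side)",
    "qr": "router interface (subnet gateway)",
    "ns": "DHCP server",
}

def pair_veths(host_taps, namespace_devices):
    """Return {tap: (namespace, peer device, role)} for every matching suffix."""
    by_suffix = {tap[len("tap"):]: tap for tap in host_taps}
    pairs = {}
    for namespace, devices in namespace_devices.items():
        for device in devices:
            prefix, suffix = device.split("-", 1)
            tap = by_suffix.get(suffix)
            if tap is not None:
                pairs[tap] = (namespace, device, ROLES.get(prefix, "unknown"))
    return pairs

pairs = pair_veths(HOST_TAPS, NAMESPACE_DEVICES)
for tap, (namespace, device, role) in pairs.items():
    print(f"{tap} <-> {device} in {namespace}: {role}")
```

The same matching works for the provider-only setup earlier, where tapa98eeb82-7a pairs with ns-a98eeb82-7a.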

[root@compute1 ~]# ip link

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

4: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:f4 brd ff:ff:ff:ff:ff:ff

6: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

7: brq7f850748-2d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 6e:e5:c4:4f:34:c9 brd ff:ff:ff:ff:ff:ff

8: tap26500e0a-b5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast master brq7f850748-2d state UNKNOWN mode DEFAULT group default qlen 1000

link/ether fe:16:3e:68:60:74 brd ff:ff:ff:ff:ff:ff

10: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 6e:e5:c4:4f:34:c9 brd ff:ff:ff:ff:ff:ff

[root@compute1 ~]# ip a

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master brqdd604587-35 state UP group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

inet6 fe80::ad6b:bdb0:9b14:c54d/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::5597:3a9:a4bd:ae3a/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::6f88:d473:e534:aaf9/64 scope link tentative noprefixroute dadfailed

valid_lft forever preferred_lft forever

4: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:a8:d7:f4 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.31/24 brd 172.16.0.255 scope global noprefixroute ens37

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fea8:d7f4/64 scope link

valid_lft forever preferred_lft forever

6: brqdd604587-35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:0c:29:a8:d7:e0 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.31/24 brd 10.0.0.255 scope global brqdd604587-35

valid_lft forever preferred_lft forever

7: brq7f850748-2d: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether 6e:e5:c4:4f:34:c9 brd ff:ff:ff:ff:ff:ff

8: tap26500e0a-b5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast master brq7f850748-2d state UNKNOWN group default qlen 1000

link/ether fe:16:3e:68:60:74 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc16:3eff:fe68:6074/64 scope link

valid_lft forever preferred_lft forever

10: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UNKNOWN group default qlen 1000

link/ether 6e:e5:c4:4f:34:c9 brd ff:ff:ff:ff:ff:ff

[root@compute1 ~]# brctl show

bridge name bridge id STP enabled interfaces

brq7f850748-2d 8000.6ee5c44f34c9 no tap26500e0a-b5

vxlan-1

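brqdd604587-35 8000.000c29a8d7e0 no ens33

Start with the bridges again. brqdd604587-35 is the provider network's bridge. I won't go into it, because this node does not use it here. The other bridge, brq7f850748-2d, has the vxlan-1 device as a port, and its other port, tap26500e0a-b5, connects to the instance.

With the topology covered, a word on the vxlan-1 device, which Neutron manages. In the current topology, an instance that wants to reach the outside has to go through the gateway 10.10.10.1, which lives on the controller node. How does it find it? Also, nothing in the output above is attached to ens37, yet according to the setup articles referenced earlier this NIC was configured as the VXLAN interface. Why is it a standalone interface with no associations? This is where VXLAN comes in: the nodes talk to each other over VXLAN. vxlan-1 is the outermost port of the tenant network's bridge brq7f850748-2d, and the agent configuration also contains this section: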

[vxlan]

enable_vxlan = true

local_ip = 172.16.0.31

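l2_population = true

This is the compute node's configuration. local_ip is the address used for VXLAN encapsulation, and it thereby selects the NIC that VXLAN uses. It must be an IP of the current host and must be reachable from the peer VXLAN endpoints. When a frame has to cross nodes, the device wraps it in one more layer: the outer source address is this local_ip, and the outer destination is the IP that the FDB table gives for the destination MAC.

That table is populated by L2 Population (multicast is an alternative); the setting appears in the [vxlan] section above. The command bridge fdb show dev vxlan-1 prints the FDB entries. On the controller node: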

[root@controller ~]# bridge fdb show dev vxlan-1

0a:b6:1f:77:c4:1b vlan 1 master brq7f850748-2d permanent

0a:b6:1f:77:c4:1b master brq7f850748-2d permanent

00:00:00:00:00:00 dst 172.16.0.31 self permanent

fa:16:3e:68:60:74 dst 172.16.0.31 self permanent

On the compute node:

[root@compute1 ~]# bridge fdb show dev vxlan-1

6e:e5:c4:4f:34:c9 vlan 1 master brq7f850748-2d permanent

6e:e5:c4:4f:34:c9 master brq7f850748-2d permanent

00:00:00:00:00:00 dst 172.16.0.11 self permanent

fa:16:3e:85:9c:4c dst 172.16.0.11 self permanent

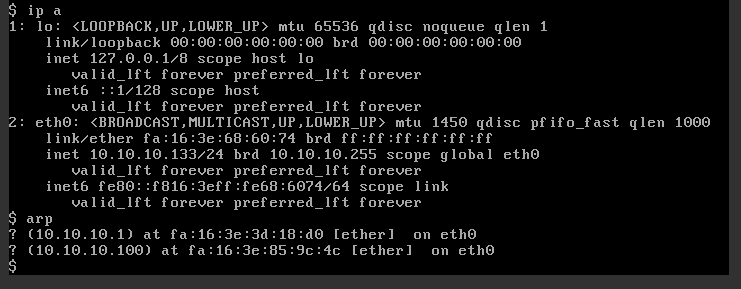

fa:16:3e:3d:18:d0 dst 172.16.0.11 self permanent这里的FDB表内容是,对方mac地址+vxlan设备地址,这些mac具体对应的ip可以在对应的对端网络通过查看arp表获取,例如在控制节点的对应网络中,即qdhcp-7f850748-2d01-4f88-95fa-41b6144b1c02命名空间中的arp表如下

[root@controller ~]# ip netns exec qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 ip a

......

3: qr-31b0feaa-20@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:3d:18:d0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.1/24 brd 10.10.10.255 scope global qr-31b0feaa-20

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe3d:18d0/64 scope link

valid_lft forever preferred_lft forever

[root@controller ~]# ip netns exec qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 arp

Address HWtype HWaddress Flags Mask Iface

10.10.10.133 ether fa:16:3e:68:60:74 C qr-31b0feaa-20

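gateway ether 00:50:56:ea:8e:2f C qg-f00694f6-24

The corresponding table inside the instance is as follows.

On the controller side, the gateway of the VXLAN network, 10.10.10.1, has MAC fa:16:3e:3d:18:d0. The instance has IP 10.10.10.133 and MAC fa:16:3e:68:60:74, and the ARP entries learned on the other side agree. These addresses are carried in every frame (this is true of any network, not just this one; see any networking textbook). The VXLAN device takes the destination MAC and looks it up in the FDB. On the compute node, for example, fa:16:3e:3d:18:d0 maps to 172.16.0.11, so the VXLAN device wraps the frame with an outer source address, an outer destination address, the VNI and so on (I won't go into the encapsulation details). The source is local_ip from the linuxbridge_agent configuration, 172.16.0.31, and the packet leaves through the NIC that holds that address. The destination is the address just found in the FDB, 172.16.0.11. The VNI distinguishes networks: all VXLAN traffic goes to the same UDP port, so the VNI is what tells one network from another. The encapsulated packet is then sent to the peer. The destination port can be read with the following command: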

[root@controller ~]# ip -d link show vxlan-1

8: vxlan-1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master brq7f850748-2d state UNKNOWN mode DEFAULT group default qlen 1000

link/ether 0a:b6:1f:77:c4:1b brd ff:ff:ff:ff:ff:ff promiscuity 1

vxlan id 1 dev ens37 srcport 0 0 dstport 8472 ageing 300 noudpcsum noudp6zerocsumtx noudp6zerocsumrx

bridge_slave state forwarding priority 32 cost 100 hairpin off guard off root_block off fastleave off learning on

flood on port_id 0x8001 port_no 0x1 designated_port 32769 designated_cost 0 designated_bridge 8000.a:b6:1f:77:c4:1b designated_root 8000.a:b6:1f:77:c4:1b hold_timer 0.00 message_age_timer 0.00 forward_delay_timer 0.00 topology_change_ack 0 config_pending 0 proxy_arp off proxy_arp_wifi off mcast_router 1 mcast_fast_leave off mcast_flood on addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

[root@controller ~]# ss -unl|grep 8472

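UNCONN 0 0 *:8472 *:*

Every host with a VXLAN device should look much the same. The IANA-assigned VXLAN port is 4789, but here it is 8472. That is the Linux kernel's legacy default, which predates the IANA assignment; the number itself is registered to Cisco's OTV. The kernel listens on this port, so you will not find a listening process for it. When a packet arrives, the kernel hands it to the matching VXLAN device, which strips the outer headers added by the sending VXLAN device and forwards the inner frame to its original destination. That is how the two sides communicate.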
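The lookup and encapsulation steps described above can be sketched as follows. This is a simplified model, not what the kernel actually runs: the FDB text is the compute node output from above, the function names and the dictionary that stands in for the outer packet are made up, and falling back to the all-zero MAC entry for unknown destinations is my understanding of how that entry is used. The header layout (flags byte 0x08, 24-bit VNI) is the standard VXLAN one, and the 50 bytes of overhead explain the MTU of 1450 seen on the VXLAN-side devices.

```python
import struct

# Outer Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8) headers.
VXLAN_OVERHEAD = 14 + 20 + 8 + 8

# Output of `bridge fdb show dev vxlan-1` on the compute node, copied from above.
COMPUTE_FDB = """
6e:e5:c4:4f:34:c9 vlan 1 master brq7f850748-2d permanent
6e:e5:c4:4f:34:c9 master brq7f850748-2d permanent
00:00:00:00:00:00 dst 172.16.0.11 self permanent
fa:16:3e:85:9c:4c dst 172.16.0.11 self permanent
fa:16:3e:3d:18:d0 dst 172.16.0.11 self permanent
"""

def parse_fdb(output: str) -> dict:
    """Map MAC -> remote endpoint IP for the entries that carry a `dst`."""
    table = {}
    for line in output.strip().splitlines():
        fields = line.split()
        if "dst" in fields:
            table[fields[0]] = fields[fields.index("dst") + 1]
    return table

def vxlan_header(vni: int) -> bytes:
    """8 bytes: flags 0x08 (VNI present), 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    return struct.pack("!II", 0x08 << 24, vni << 8)

def encapsulate(inner_frame: bytes, dst_mac: str, fdb: dict,
                local_ip: str, vni: int, dst_port: int = 8472) -> dict:
    """Model of the outer packet; unknown MACs fall back to the all-zero entry."""
    remote_ip = fdb.get(dst_mac, fdb.get("00:00:00:00:00:00"))
    return {
        "outer_src": local_ip,    # local_ip from the [vxlan] section
        "outer_dst": remote_ip,   # endpoint found in the FDB
        "udp_dport": dst_port,    # 8472 in this environment
        "payload": vxlan_header(vni) + inner_frame,
    }

fdb = parse_fdb(COMPUTE_FDB)
packet = encapsulate(b"inner-ethernet-frame", "fa:16:3e:3d:18:d0", fdb,
                     local_ip="172.16.0.31", vni=1)
print(packet["outer_src"], "->", packet["outer_dst"], "port", packet["udp_dport"])
print("tenant MTU:", 1500 - VXLAN_OVERHEAD)
```

Here the instance sends a frame to the gateway MAC fa:16:3e:3d:18:d0, and the sketch picks 172.16.0.11 as the outer destination, the same result as reading the FDB by hand.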

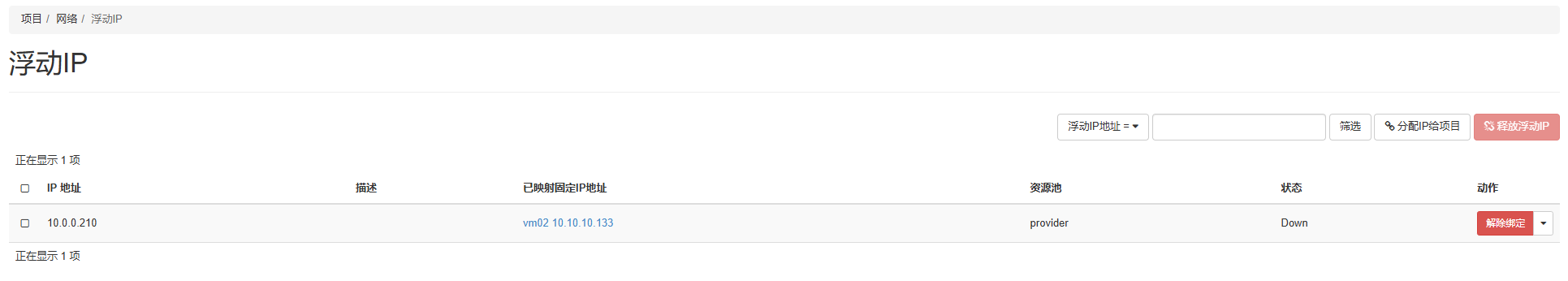

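Now for floating IPs. I created one and associated it with the current instance, as shown below.

After the association, the compute node's network is unchanged. Instead, on the controller node, the outbound interface in the qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 namespace gained an extra IP: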

[root@controller ~]# ip netns exec qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 ip a

2: qg-f00694f6-24@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:6a:e4:80 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.0.0.208/24 brd 10.0.0.255 scope global qg-f00694f6-24

valid_lft forever preferred_lft forever

inet 10.0.0.210/32 brd 10.0.0.210 scope global qg-f00694f6-24

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe6a:e480/64 scope link

valid_lft forever preferred_lft forever

3: qr-31b0feaa-20@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:3d:18:d0 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.1/24 brd 10.10.10.255 scope global qr-31b0feaa-20

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe3d:18d0/64 scope link

valid_lft forever preferred_lft forever

The corresponding forwarding rules show up in iptables:

[root@controller ~]# ip netns exec qrouter-e651f481-55aa-46a7-aa62-96ef71e5bac3 iptables -t nat -L -n -v|grep 210

0 0 DNAT all -- * * 0.0.0.0/0 10.0.0.210 to:10.10.10.133

0 0 DNAT all -- * * 0.0.0.0/0 10.0.0.210 to:10.10.10.133

0 0 SNAT all -- * * 10.10.10.133 0.0.0.0/0 to:10.0.0.210

The resulting network structure is shown in the figure below.
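The NAT rules above boil down to a pair of address rewrites. Here is a minimal sketch, assuming a packet reduced to just its source and destination addresses. The Packet type, the function names and the client address 10.0.0.1 are made up for illustration; the real rewriting is done by iptables inside the router namespace.

```python
from typing import NamedTuple

class Packet(NamedTuple):
    src: str
    dst: str

FLOATING_IP = "10.0.0.210"   # added to qg-f00694f6-24 as a /32
FIXED_IP = "10.10.10.133"    # the instance's address on the VXLAN subnet

def dnat(packet: Packet) -> Packet:
    """Inbound: traffic to the floating IP is redirected to the instance."""
    if packet.dst == FLOATING_IP:
        return packet._replace(dst=FIXED_IP)
    return packet

def snat(packet: Packet) -> Packet:
    """Outbound: traffic from the instance leaves with the floating IP as source."""
    if packet.src == FIXED_IP:
        return packet._replace(src=FLOATING_IP)
    return packet

# Hypothetical external client at 10.0.0.1 talking to the floating IP.
inbound = dnat(Packet(src="10.0.0.1", dst=FLOATING_IP))
outbound = snat(Packet(src=FIXED_IP, dst="10.0.0.1"))
print(inbound)
print(outbound)
```

This also shows why nothing changes on the compute node: the instance only ever sees its fixed address, and the translation happens entirely on the router side.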

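Reflections

While studying Neutron, the OpenStack networking component, I had quite a few questions. I have collected them here, along with how I verified the answers.

In a FLAT network, why don't the internal and external DHCP servers interfere with each other?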

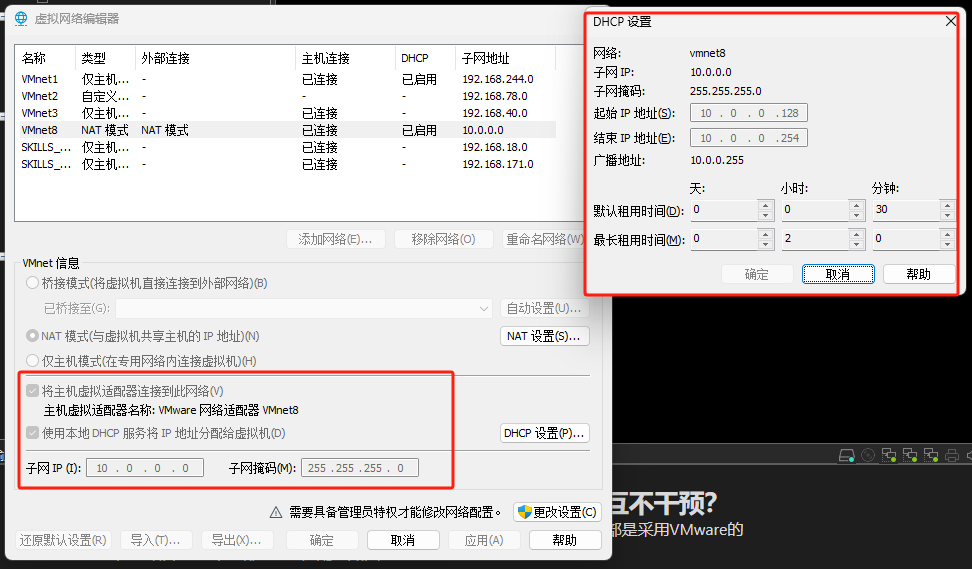

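The question is not quite rigorous. At the time I had the provider network layout described above. The outside was a VMware NAT network, on which I enabled VMware's own DHCP, configured as shown.

My FLAT subnet also had a DHCP agent, as shown below.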

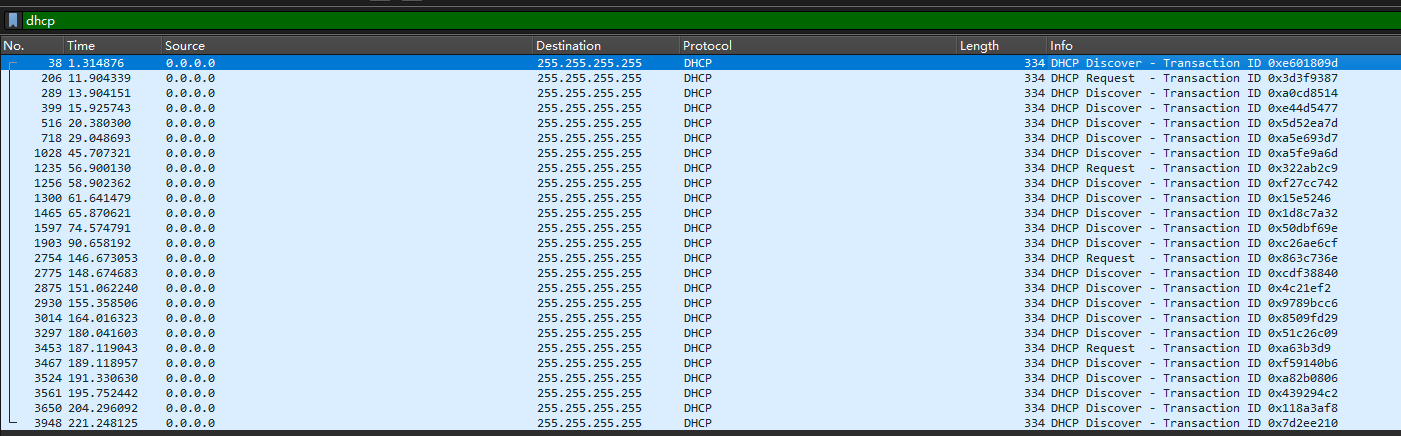

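I found that the two DHCP servers do not interfere with each other. With the subnet's DHCP turned off, instances in the subnet cannot get an IP from the external DHCP. With the external DHCP off and the internal one on, VMs on the external network cannot get an IP from the internal DHCP either. Every document describes a FLAT network as effectively a direct connection, so why doesn't DHCP cross over in this environment, when the instances can even use the external gateway to reach the internet? I found no article that discusses this, so I went into the VMs and analyzed it myself. My first suspicion was internal isolation: two broadcast domains stitched together in software. To test it, I turned off the internal subnet's DHCP so that the instance would keep broadcasting its requests. Capturing on any host showed its broadcasts, and the external DHCP did reply to them. That was strange, as shown below.

Look at the first red box. The instance broadcasts a DHCP Discover, and 10.0.0.254 clearly answers with an Offer, but nothing follows. Normally the client would reply with a DHCP Request to confirm, yet here it simply keeps broadcasting Discover. The server answers again, this time offering 10.0.0.161. Meanwhile the external DHCP server keeps ARPing for the address it handed out and never gets a reply, and the cycle repeats. So both are indeed in the same broadcast domain; the client just never uses the external DHCP. In the OpenStack network layout, the bridge at this layer (I call it the second-layer bridge), as shown,

also filters packets. Checking the iptables rules on the compute node gave the game away: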

[root@compute1 ~]# iptables-save | grep -E '67|68'

:INPUT ACCEPT [127064:177468678]

-A neutron-linuxbri-i76c0c08c-7 -d 10.0.0.206/32 -p udp -m udp --sport 67 --dport 68 -j RETURN

-A neutron-linuxbri-i76c0c08c-7 -d 255.255.255.255/32 -p udp -m udp --sport 67 --dport 68 -j RETURN

-A neutron-linuxbri-o76c0c08c-7 -s 0.0.0.0/32 -d 255.255.255.255/32 -p udp -m udp --sport 68 --dport 67 -m comment --comment "Allow DHCP client traffic." -j RETURN

-A neutron-linuxbri-o76c0c08c-7 -p udp -m udp --sport 68 --dport 67 -m comment --comment "Allow DHCP client traffic." -j RETURN

-A neutron-linuxbri-o76c0c08c-7 -p udp -m udp --sport 67 --dport 68 -m comment --comment "Prevent DHCP Spoofing by VM." -j DROP

Two of these rules matter most. The first:

-A neutron-linuxbri-i76c0c08c-7 -d 10.0.0.206/32 -p udp -m udp --sport 67 --dport 68 -j RETURN

This rule says that a DHCP reply addressed to 10.0.0.206 is returned to the calling chain, so it passes as long as the built-in chains (INPUT, OUTPUT and so on) allow it. I checked those chains, and their policy is ACCEPT:

[root@compute1 ~]# iptables -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

neutron-linuxbri-INPUT all -- anywhere anywhere

Chain FORWARD (policy ACCEPT)

target prot opt source destination

neutron-filter-top all -- anywhere anywhere

neutron-linuxbri-FORWARD all -- anywhere anywhere

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

neutron-filter-top all -- anywhere anywhere

neutron-linuxbri-OUTPUT all -- anywhere anywhere

......

In other words, the instance is allowed to receive DHCP replies for this IP. Now the second rule:

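-A neutron-linuxbri-o76c0c08c-7 -p udp -m udp --sport 67 --dport 68 -m comment --comment "Prevent DHCP Spoofing by VM." -j DROP

iptables matches rules from top to bottom. A DHCP reply that matches neither RETURN rule in the i chain above is not let through, and this DROP rule additionally stops anything behind the port from answering as a DHCP server (it sits in the o chain, which handles traffic leaving the instance). The upshot is that an Offer for any other address never reaches the instance, so whether or not the subnet has its own DHCP, instances cannot use the external one. That settles the first question. Now the second: why can't the internal DHCP, when enabled, hand out addresses to external hosts? I turned VMware's DHCP off, booted a VM on this VMware network and let it request an IP over DHCP, while capturing on the qdhcp interface inside the corresponding qdhcp namespace on the controller node. When the external host tried to get an IP, I captured the following, as shown.

It keeps sending DHCP Discover, searching the broadcast domain for a DHCP server, but the qdhcp side never answers. At that point it clicked: the DHCP that Neutron maintains only serves instances that the cluster manages. Presumably it filters by MAC address: a MAC that has an IP on record gets that IP, and anything else is ignored. The details are in the configuration of the software behind qdhcp. My default DHCP agent configuration is: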

[root@controller ~]# cat /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

It uses Dnsmasq. Let's look at its startup arguments, which the following command shows:

[root@controller ~]# ip netns exec qdhcp-dd604587-3542-409b-9ca8-eed06840fb55 ps aux |grep dnsmasq

nobody 7584 0.0 0.0 56128 1068 ? S 17:22 0:00 dnsmasq --no-hosts --no-resolv --pid-file=/var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/pid --dhcp-hostsfile=/var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/host --addn-hosts=/var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/addn_hosts --dhcp-optsfile=/var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/opts --dhcp-leasefile=/var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/leases --dhcp-match=set:ipxe,175 --dhcp-userclass=set:ipxe6,iPXE --local-service --bind-dynamic --dhcp-range=set:subnet-69611b38-36b5-4e98-b47e-949c2137dbe1,10.0.0.0,static,255.255.255.0,86400s --dhcp-option-force=option:mtu,1500 --dhcp-lease-max=256 --conf-file= --domain=openstacklocal

You can look up the individual arguments yourself (or ask GPT). Here I only cover how the software achieves this behavior. One argument is --dhcp-hostsfile=/var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/host, which loads a predefined MAC-to-IP mapping file. Together with the static keyword in --dhcp-range, it makes dnsmasq answer DHCP requests only from the MAC addresses listed in that file. Neutron manages the file. Its contents are:

[root@controller ~]# cat /var/lib/neutron/dhcp/dd604587-3542-409b-9ca8-eed06840fb55/host

fa:16:3e:4d:6a:b1,host-10-0-0-206.openstacklocal,10.0.0.206

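fa:16:3e:cb:5e:78,host-10-0-0-200.openstacklocal,10.0.0.200

It holds the entries for the DHCP port itself and for my instance. That clears the question up. The two do not interfere because of the filter rules and response conditions on the inside: only the internal DHCP's replies are passed to instances and everything else is filtered out, and the internal DHCP does not answer Discover packets from external machines.

How the two layers of bridges map to OpenStack concepts

See the figure below. To experiment, start with one subnet and one instance on a network, then add instances, subnets and networks one at a time, and check on each node how the IPs, NICs and bridges correspond.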
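The decision the internal DHCP makes can be modeled in a few lines. This is a simplified sketch of the behavior I observed, not dnsmasq's actual code: the host file content is copied from above, the function names are made up, and the external MAC in the example is a hypothetical VMware guest.

```python
# Model of "serve only known MACs": a Discover gets an Offer only if its MAC
# appears in the Neutron-managed host file.

HOST_FILE = """\
fa:16:3e:4d:6a:b1,host-10-0-0-206.openstacklocal,10.0.0.206
fa:16:3e:cb:5e:78,host-10-0-0-200.openstacklocal,10.0.0.200
"""

def load_hosts(text: str) -> dict:
    """Parse `mac,hostname,ip` lines into a MAC -> IP table."""
    hosts = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        mac, _hostname, ip = line.split(",")
        hosts[mac.lower()] = ip
    return hosts

def offer_for(mac: str, hosts: dict):
    """Return the address to offer, or None when the MAC is unknown (no reply)."""
    return hosts.get(mac.lower())

hosts = load_hosts(HOST_FILE)
print(offer_for("fa:16:3e:4d:6a:b1", hosts))   # my instance
print(offer_for("00:0c:29:aa:bb:cc", hosts))   # hypothetical external VMware guest
```

The external guest gets no answer, which matches the capture where its Discover packets went unanswered.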

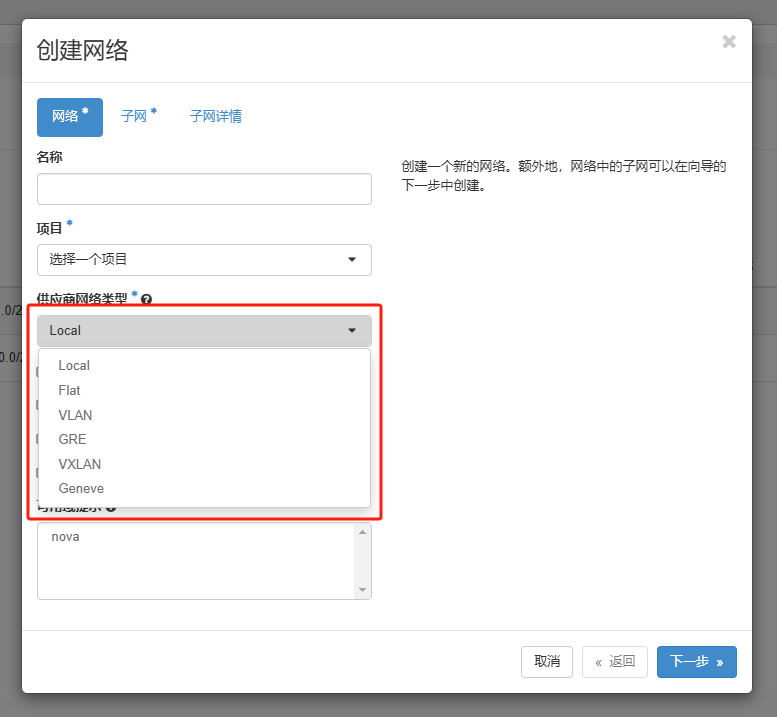

Are there implementations other than VXLAN?

Yes, there are: VLAN, VXLAN, GRE, Flat, Geneve and Local, as shown.

Roughly, they differ as follows.

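- VLAN: As I recall, VLANs are limited in number, to 4096 IDs. Using VLAN also requires trunk configuration on the corresponding switch ports.

- VXLAN: Supports about 16 million networks, which is practically inexhaustible, and can span data centers. Its one drawback is the extra layer of encapsulation, which costs additional compute resources.

- GRE: A tunneling protocol. It can cross multiple physical networks, carries many protocols and is very flexible, but it provides no encryption and is usually paired with IPsec. Bandwidth and performance are also affected by tunnel load.

- Flat: Nothing much to say. It is a directly connected network with no isolation.

- Geneve: More capable than VXLAN, but it is newer and not yet widespread.

- Local: VMs can communicate only if they are on the same host and in the same Local network. Restrictive, but fairly secure.

Note that these network types must be enabled in the configuration before they can be used; see /etc/neutron/plugins/ml2/ml2_conf.ini: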
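The VLAN and VXLAN limits in the list come straight from the width of their ID fields. A quick check, assuming the standard sizes of 12 bits for the VLAN ID and 24 bits for the VXLAN VNI (the constant and function names are mine):

```python
VLAN_ID_BITS = 12     # 802.1Q VLAN ID field
VXLAN_VNI_BITS = 24   # VXLAN network identifier field

def id_space(bits: int) -> int:
    """Number of distinct values an ID field of the given width can hold."""
    return 2 ** bits

vlan_ids = id_space(VLAN_ID_BITS)
vxlan_vnis = id_space(VXLAN_VNI_BITS)

print("VLAN IDs:", vlan_ids)
print("usable VLAN IDs:", vlan_ids - 2)   # IDs 0 and 4095 are reserved
print("VXLAN VNIs:", vxlan_vnis)
```

So the "16M" for VXLAN is 2 to the power 24, four thousand times the VLAN range.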

type_drivers = flat,vlan,vxlan
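To check which types a node has enabled, the option can be read with Python's configparser. This is a minimal sketch: the text below stands in for ml2_conf.ini, the [ml2] section header is my assumption about where the option lives (the excerpt above only shows the option line), and the function name is made up.

```python
import configparser

# Stand-in for /etc/neutron/plugins/ml2/ml2_conf.ini; the [ml2] section header
# is an assumption, only the type_drivers line appears in the article.
ML2_CONF = """\
[ml2]
type_drivers = flat,vlan,vxlan
"""

def enabled_type_drivers(conf_text: str) -> list:
    """Return the network types listed in type_drivers, in order."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    raw = parser.get("ml2", "type_drivers", fallback="")
    return [name.strip() for name in raw.split(",") if name.strip()]

drivers = enabled_type_drivers(ML2_CONF)
print(drivers)
print("geneve enabled:", "geneve" in drivers)
```

With the configuration above, a Geneve or GRE network could not be created until the type is added to the list.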