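Preface

Reference: https://docs.openstack.org/cinder/stein/install/

Block storage is provided by the cinder component. Like the other OpenStack services, it has a controller-node part and a storage-node part; in this walkthrough the storage nodes run on the compute hosts.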

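Controller node

Reference: https://docs.openstack.org/cinder/stein/install/cinder-controller-install-rdo.html

First create the database and grant access to it. Run the following on the controller node, replacing CINDER_DBPASS with a suitable password: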

mysql -u root -p

CREATE DATABASE cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';
GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

Create the cinder user in the identity service and give it the admin role:

. admin-openrc
openstack user create --domain default --password CINDER_PASS cinder
openstack role add --project service --user cinder admin

Create the service entities:

openstack service create --name cinderv2 \
  --description "OpenStack Block Storage" volumev2
openstack service create --name cinderv3 \
  --description "OpenStack Block Storage" volumev3

Create the API endpoints:

openstack endpoint create --region RegionOne \
  volumev2 public http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev2 internal http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev2 admin http://controller:8776/v2/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev3 public http://controller:8776/v3/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev3 internal http://controller:8776/v3/%\(project_id\)s
openstack endpoint create --region RegionOne \
  volumev3 admin http://controller:8776/v3/%\(project_id\)s

Install the package:

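yum install -y openstack-cinder

Back up the configuration file, then strip comments and blank lines from the working copy:

cp /etc/cinder/cinder.conf{,.bak}
egrep -v "^#|^$" /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

Edit /etc/cinder/cinder.conf; the relevant settings are: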

[DEFAULT]

my_ip = 10.0.0.11

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Populate the database as the cinder user. This step prints a deprecation message, which can be ignored.

su -s /bin/sh -c "cinder-manage db sync" cinder

Point the Compute service on the controller node at cinder by editing /etc/nova/nova.conf; the relevant setting is:

[cinder]
os_region_name = RegionOne

Restart the nova API service:

systemctl restart openstack-nova-api.service

Enable and start the cinder services:

systemctl enable --now openstack-cinder-api.service openstack-cinder-scheduler.service

To verify, see: https://docs.openstack.org/cinder/stein/install/cinder-verify.html
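The six `openstack endpoint create` commands earlier in this section differ only in the API version and the interface, so they can be generated by a loop. The following is a minimal dry-run sketch, not part of the original walkthrough: it only prints the commands, and the variables CONTROLLER_HOST and REGION are illustrative names that default to the values used in this post.

```shell
#!/bin/sh
# Dry run: print the six endpoint-create commands instead of executing them.
# CONTROLLER_HOST and REGION are illustrative variables (assumptions),
# defaulting to the values used in this post.
CONTROLLER_HOST="${CONTROLLER_HOST:-controller}"
REGION="${REGION:-RegionOne}"

print_endpoint_commands() {
    for version in 2 3; do
        for interface in public internal admin; do
            # The URL is single-quoted in the output, so %(project_id)s
            # needs no backslash escaping when the command is run.
            printf "openstack endpoint create --region %s volumev%s %s 'http://%s:8776/v%s/%%(project_id)s'\n" \
                "$REGION" "$version" "$interface" "$CONTROLLER_HOST" "$version"
        done
    done
}

print_endpoint_commands
```

After reviewing the printed commands, they could be piped to `sh` in a shell where the admin credentials have already been sourced.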

存储节点

参考文档: https://docs.openstack.org/cinder/stein/install/cinder-storage-install-rdo.html

官方推荐使用lvm+块存储的方式来做openstack的存储

这里在计算节点compute1安装第一个存储节点

yum install -y lvm2 device-mapper-persistent-data启动服务

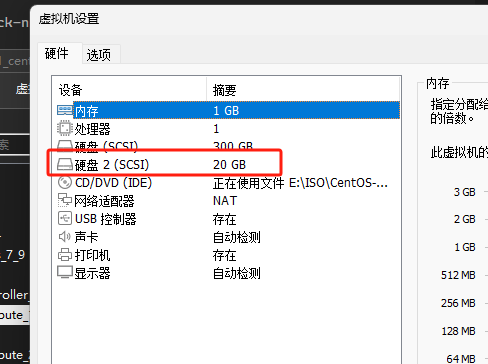

systemctl enable --now lvm2-lvmetad.service现在给这个节点添加一个硬盘

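Use the following commands to make the host detect the hot-added disk without a reboot:

echo '- - -' > /sys/class/scsi_host/host0/scan
echo '- - -' > /sys/class/scsi_host/host1/scan
echo '- - -' > /sys/class/scsi_host/host2/scan

Create the physical volume and the volume group (the new disk appears as /dev/sdb here):

pvcreate /dev/sdb
vgcreate cinder-volumes /dev/sdb

Edit the LVM configuration file /etc/lvm/lvm.conf, following: https://docs.openstack.org/cinder/stein/install/cinder-storage-install-rdo.html#prerequisites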

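The guide advises restricting LVM's device scan to the disks that actually hold your volume groups. Without the filter, LVM also scans the volumes created on cinder-volumes, which can cause various problems later. If the operating system disk itself uses LVM, the filter has to accept it as well. My system disk uses LVM, so the setting becomes:

filter = [ "a/sda/", "a/sdb/", "r/.*/"]

The guide also notes that compute nodes whose operating system disk uses LVM need a filter that accepts only that disk (sda in this example); apply it on those nodes if that is your case.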
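The filter is an ordered list of patterns: entries starting with `a` accept a device, entries starting with `r` reject it, and the first matching entry decides. A small sketch of how the line could be built from a list of device names follows; the function name `build_lvm_filter` is hypothetical and only illustrates the format, it does not edit lvm.conf.

```shell
#!/bin/sh
# Build an lvm.conf filter line that accepts the given devices and rejects
# everything else. build_lvm_filter is a hypothetical helper for illustration.
build_lvm_filter() {
    filter=""
    for dev in "$@"; do
        # One accept rule per device name, e.g. "a/sda/"
        filter="${filter}\"a/${dev}/\", "
    done
    # The final reject rule makes LVM ignore every device not accepted above.
    printf 'filter = [ %s"r/.*/" ]\n' "$filter"
}

# Storage node whose system disk (sda) uses LVM, with sdb holding cinder-volumes:
build_lvm_filter sda sdb
# Compute node whose system disk (sda) uses LVM:
build_lvm_filter sda
```

The printed line still has to be placed in the `devices` section of /etc/lvm/lvm.conf by hand.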

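Install the packages:

yum install -y openstack-cinder targetcli python-keystone

Back up the configuration file and strip comments and blank lines from the working copy:

cp /etc/cinder/cinder.conf{,.bak}
egrep -v "^#|^$" /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

Edit /etc/cinder/cinder.conf; the relevant settings are: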

[DEFAULT]

enabled_backends = lvm

glance_api_servers = http://controller:9292

# IP address of this node

my_ip = 10.0.0.31

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = lioadm

Enable and start the services:

systemctl enable --now openstack-cinder-volume.service target.service

To verify, see: https://docs.openstack.org/cinder/stein/install/cinder-verify.html

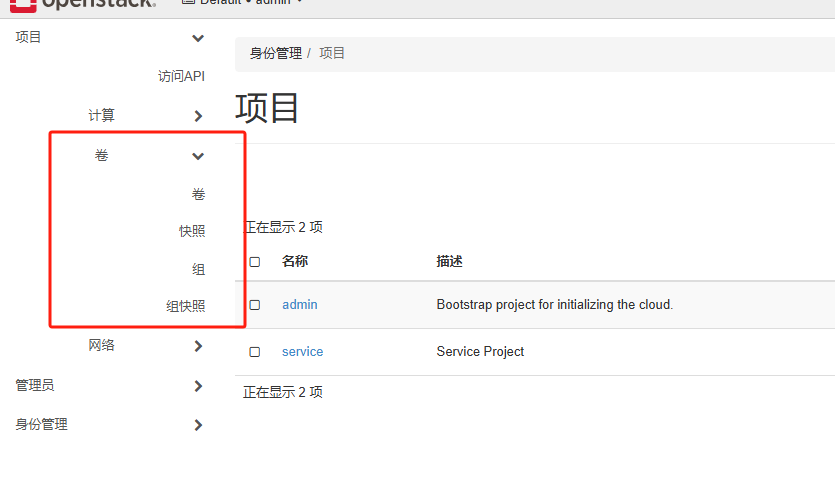

Opening the dashboard now shows a new Volumes entry under Project.
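The verification page linked above comes down to checking that every cinder service reports the state `up`. The sketch below shows one way to script that check. It assumes the output format of `openstack volume service list -f value -c Binary -c Host -c State` (one service per line, state in the last column); the function name `all_services_up` and the sample lines are illustrative, not output captured from this environment.

```shell
#!/bin/sh
# Succeeds only if stdin holds at least one service line and every line
# ends with the state "up". all_services_up is a hypothetical helper.
all_services_up() {
    awk 'NF { total++; if ($NF != "up") bad++ }
         END { if (total > 0 && bad == 0) exit 0; exit 1 }'
}

# Illustrative sample in the assumed "binary host state" format. On a real
# controller the input would come from:
#   openstack volume service list -f value -c Binary -c Host -c State
sample='cinder-scheduler controller up
cinder-volume compute1@lvm up'

if printf '%s\n' "$sample" | all_services_up; then
    echo "all cinder services are up"
else
    echo "at least one cinder service is not up"
fi
```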

Other storage backends

The backend connected above is LVM; the following sections connect NFS and Ceph.

Connecting NFS

For details, see:

- https://docs.openstack.org/cinder/stein/drivers.html#nfsdriver

- https://www.cnblogs.com/jiawei2527/p/14028185.html

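NFS is connected here on both compute1 and compute2, with the NFS server itself running on compute2. Why configure it on two hosts? Because a single cinder storage node can serve several kinds of storage, and configuring both hosts demonstrates that: compute1 ends up with LVM plus NFS, compute2 with NFS only.

First set up the NFS server on compute2:

yum -y install nfs-utils

Edit /etc/exports and add: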

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)
/data2 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

Create the directories, then enable and start the services so that the exports are loaded:

[root@compute2 ~]# mkdir /data
[root@compute2 ~]# mkdir /data2
[root@compute2 ~]# systemctl enable rpcbind
[root@compute2 ~]# systemctl enable nfs
Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.
[root@compute2 ~]# systemctl start rpcbind
[root@compute2 ~]# systemctl start nfs

On compute1, run showmount -e 10.0.0.32 to verify the NFS exports:

[root@compute1 ~]# showmount -e 10.0.0.32
Export list for 10.0.0.32:
/data2 10.0.0.0/24
/data 10.0.0.0/24

Connect cinder on compute1 to the NFS storage by editing /etc/cinder/cinder.conf; the main changes are:

[DEFAULT]

...

enabled_backends = lvm,nfs

...

[lvm]

...

volume_backend_name = lvm

...

[nfs]

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares

volume_backend_name = nfs1

Edit /etc/cinder/nfs_shares and add:

10.0.0.32:/data

Restart the service:

systemctl restart openstack-cinder-volume.service

Next, set up a second storage node on compute2 and connect it to NFS as well.

Reference: https://docs.openstack.org/cinder/stein/install/cinder-storage-install-rdo.html

Install the packages:

yum install -y device-mapper-persistent-data openstack-cinder targetcli python-keystone

Back up the configuration file and strip comments and blank lines from the working copy:

cp /etc/cinder/cinder.conf{,.bak}
egrep -v "^#|^$" /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

Edit /etc/cinder/cinder.conf; the main settings are:

[DEFAULT]

glance_api_servers = http://controller:9292

enabled_backends = nfs

my_ip = 10.0.0.32

auth_strategy = keystone

transport_url = rabbit://openstack:RABBIT_PASS@controller

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[nfs]

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares

volume_backend_name = nfs2编辑NFS连接文件/etc/cinder/nfs_shares,主要内容如下

10.0.0.32:/data2启动服务

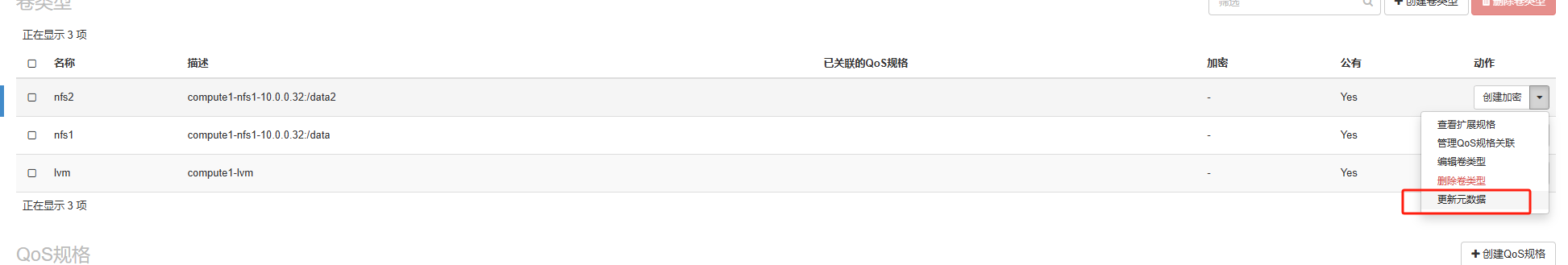

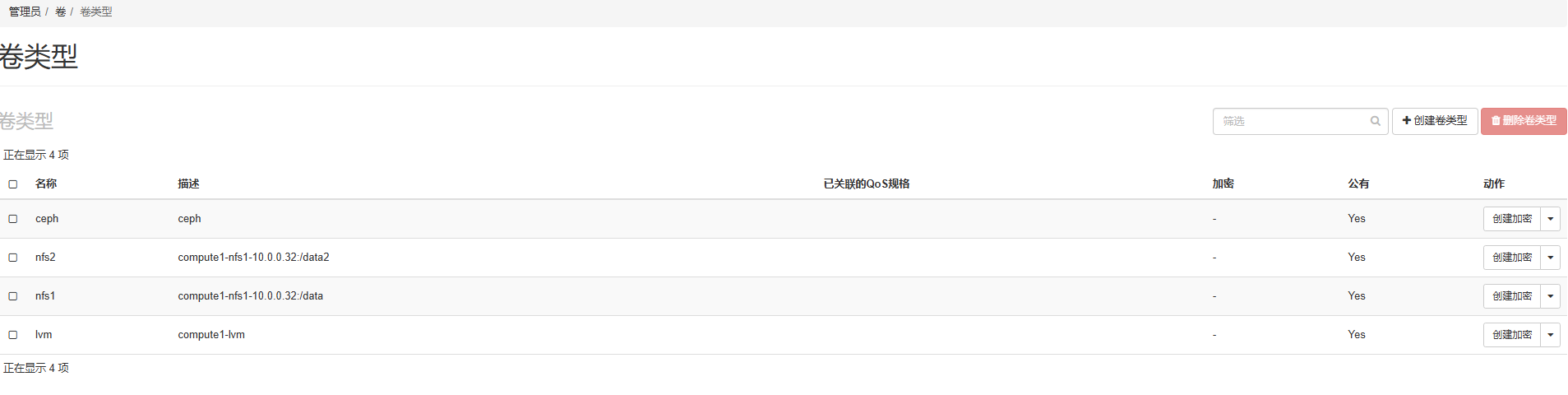

systemctl enable --now openstack-cinder-volume.service target.service这里算是配置了三个存储,一个是compute1上的lvm,nfs1,然后compute2上的一个nfs2,它们三个在openstack也可以做到区分,也建议做区分的使用,打开仪表盘的管理员-》卷-》卷类型中创建三个卷类型

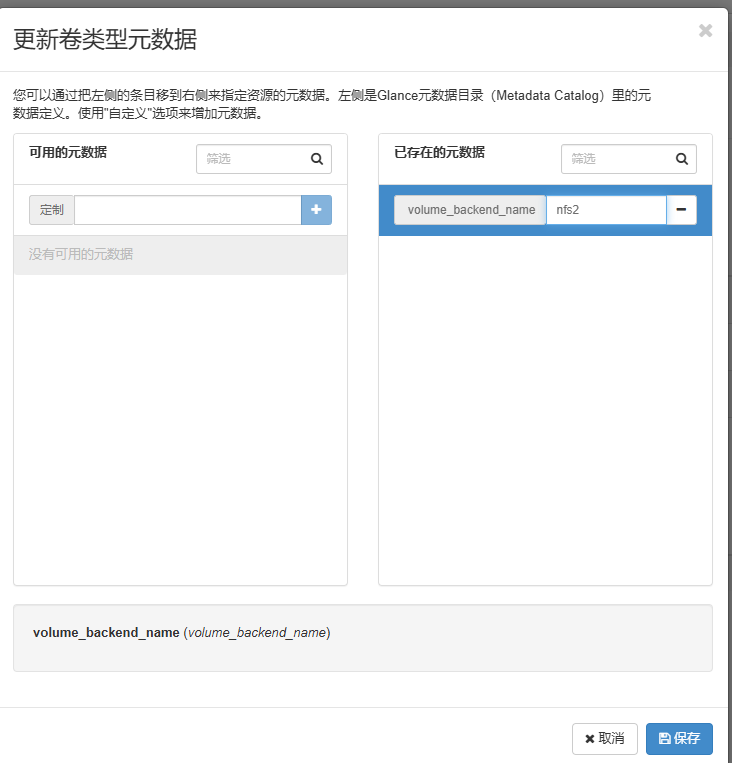

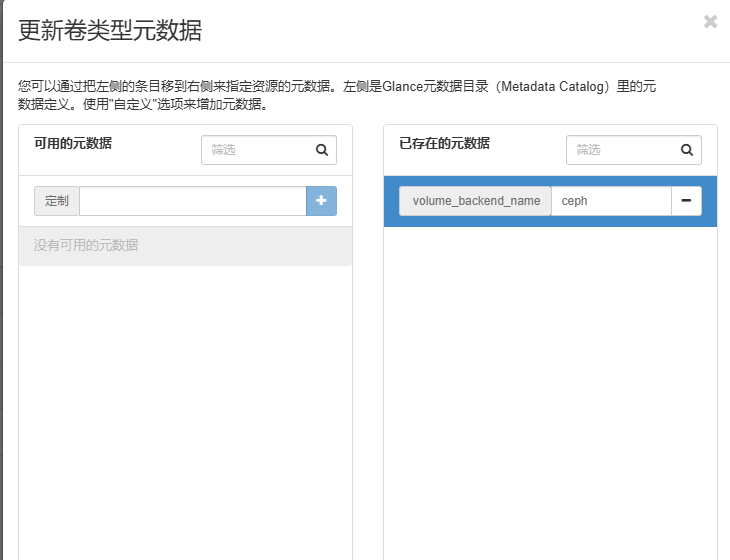

挨个更新它们的元数据

下面是nfs2的

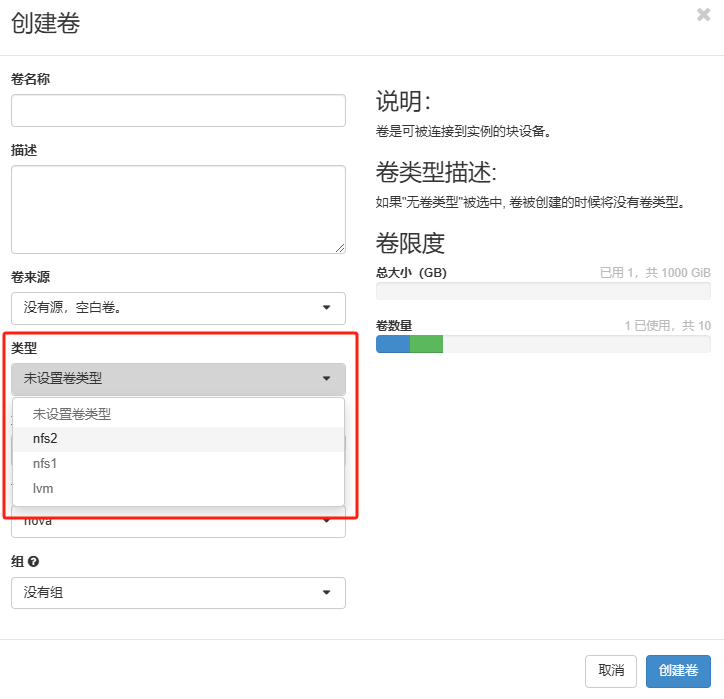

其他三个同理,这里就是给对接的卷做分类,通过配置的volume_backend_name选项做分类,三个都做好之后去计算-》卷-》卷中创建卷,此时就可以选择卷类型

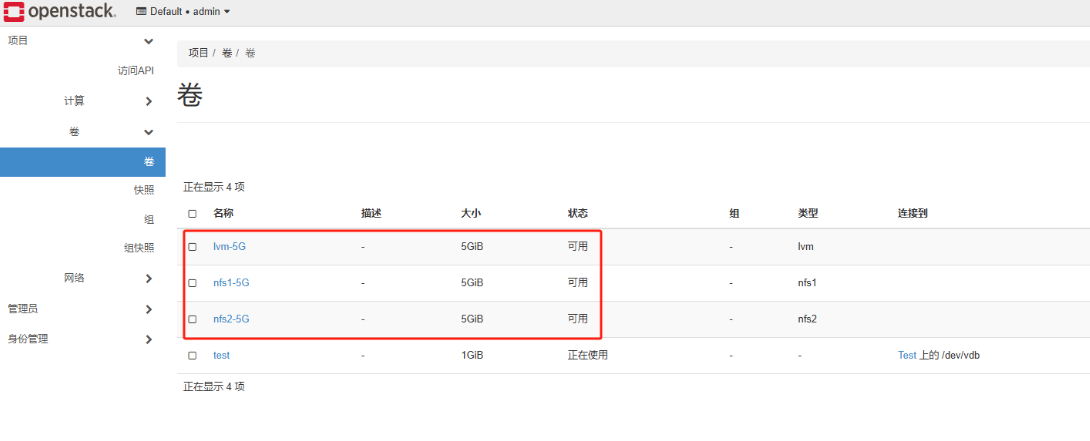

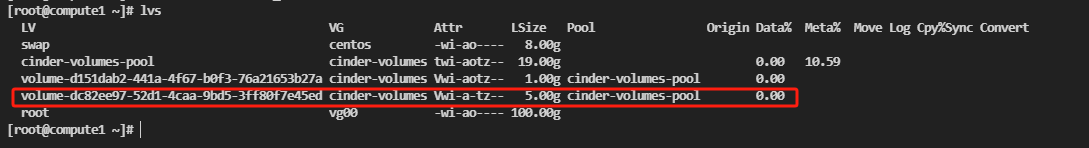

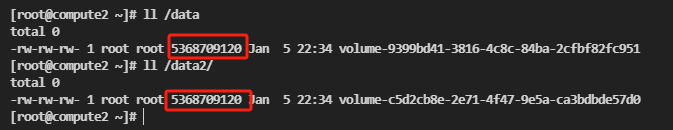

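I create one 5 GB volume of each type, then check the hosts that provide the storage.

All volumes are available; on the hosts:

LVM on the compute1 host

nfs1 and nfs2 on the compute2 host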
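The dashboard steps above (create a volume type, set its volume_backend_name metadata, create a volume of that type) can also be expressed with the openstack CLI. The following dry-run sketch only prints the commands. It assumes each volume type is named after its backend and uses hypothetical volume names of the form test-NAME; the original post did these steps in the dashboard.

```shell
#!/bin/sh
# Dry run: print the CLI equivalents of the dashboard steps.
# Each argument is a "type_name:backend_name" pair; the type names and the
# test-<type> volume names are illustrative assumptions.
print_volume_type_commands() {
    for pair in "$@"; do
        type_name="${pair%%:*}"   # text before the first colon
        backend="${pair#*:}"      # text after the first colon
        echo "openstack volume type create $type_name"
        echo "openstack volume type set --property volume_backend_name=$backend $type_name"
        echo "openstack volume create --type $type_name --size 5 test-$type_name"
    done
}

print_volume_type_commands lvm:lvm nfs1:nfs1 nfs2:nfs2
```

The backend names must match the volume_backend_name values in each node's cinder.conf, since that is what the scheduler uses to route a volume to a backend.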

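Connecting Ceph

My Ceph hosts have the following addresses, and the networks can reach each other:

# Network configuration

Hostname    NAT network
node1       10.0.0.150/24
node2       10.0.0.151/24
node3       10.0.0.152/24

My Ceph cluster runs reef, which is fairly new. The client should normally match the cluster version; because of my environment I use the nautilus client against the reef cluster, which carries some risk. nautilus is the newest Ceph version available from the repositories that come with the Stein release. Install the Ceph tools on every compute host: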

yum -y install ceph-common

Then, on the Ceph admin node, create a pool to hold the cinder data (see the Ceph documentation for what the parameters mean):

ceph osd pool create volumes 128

Next create an access credential:

ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes,allow rwx pool=vms, allow rx pool=images'

The command returns:

[client.cinder]
key = AQBAE4Jnr2FADxAA+5RG4nU8mOzkL3eHVzcdug==

Copy this content to /etc/ceph/ceph.client.cinder.keyring on every OpenStack node:

cat > /etc/ceph/ceph.client.cinder.keyring <<EOF
[client.cinder]
key = AQBAE4Jnr2FADxAA+5RG4nU8mOzkL3eHVzcdug==
EOF

# Set permissions. Either command works; the first one only works once cinder is installed, because it relies on the cinder user.
chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring
chmod +r /etc/ceph/ceph.client.cinder.keyring

Configure the libvirt secret on all compute hosts:

# Generate a UUID
uuidgen

# Mine returned ed4a34e1-590e-4cce-b35a-286a48d7b040; every host must use this same UUID
cat > secret.xml <<EOF
<secret ephemeral='no' private='no'>
  <uuid>ed4a34e1-590e-4cce-b35a-286a48d7b040</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF

Then define the secret and set the Ceph key as its value:

virsh secret-define --file secret.xml
awk '$1 == "key" {print $3}' /etc/ceph/ceph.client.cinder.keyring > ./client.cinder.key
virsh secret-set-value --secret ed4a34e1-590e-4cce-b35a-286a48d7b040 --base64 $(cat ./client.cinder.key)

Next, on compute3, bring over the Ceph cluster's /etc/ceph/ceph.conf. Mine contains:

[global]
fsid = 81ecd502-cf4e-11ef-baaf-000c297bc826
mon_host = [v2:10.0.0.150:3300/0,v1:10.0.0.150:6789/0] [v2:10.0.0.151:3300/0,v1:10.0.0.151:6789/0] [v2:10.0.0.152:3300/0,v1:10.0.0.152:6789/0]

It records the cluster ID and the monitor addresses. Place it at /etc/ceph/ceph.conf on the cinder node that connects to Ceph:

cat > /etc/ceph/ceph.conf <<EOF
[global]
fsid = 81ecd502-cf4e-11ef-baaf-000c297bc826
mon_host = [v2:10.0.0.150:3300/0,v1:10.0.0.150:6789/0] [v2:10.0.0.151:3300/0,v1:10.0.0.151:6789/0] [v2:10.0.0.152:3300/0,v1:10.0.0.152:6789/0]
EOF

Install cinder on compute3:

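yum install -y openstack-cinder targetcli python-keystone

Back up the configuration file and strip comments and blank lines from the working copy:

cp /etc/cinder/cinder.conf{,.bak}
egrep -v "^#|^$" /etc/cinder/cinder.conf.bak > /etc/cinder/cinder.conf

Edit /etc/cinder/cinder.conf; the relevant settings are: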

[DEFAULT]

glance_api_servers = http://controller:9292

my_ip = 10.0.0.33

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

enabled_backends = ceph

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

www_authenticate_uri = http://controller:5000

auth_url = http://controller:5000

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = CINDER_PASS

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rados_connect_timeout = -1

rbd_user = cinder

rbd_secret_uuid = ed4a34e1-590e-4cce-b35a-286a48d7b040

volume_backend_name = ceph

rbd_ceph_conf = /etc/ceph/ceph.conf

Unlike the official guide, no LVM backend is configured here, only Ceph. Adjust my_ip, rbd_secret_uuid and volume_backend_name to your own environment. Start the services:

systemctl enable openstack-cinder-volume.service target.service
systemctl start openstack-cinder-volume.service target.service
systemctl restart openstack-cinder-volume.service

In the dashboard, create a volume type and set its metadata with volume_backend_name = ceph:

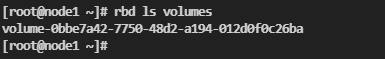

尝试创建卷试试

成功创建,去ceph中查看

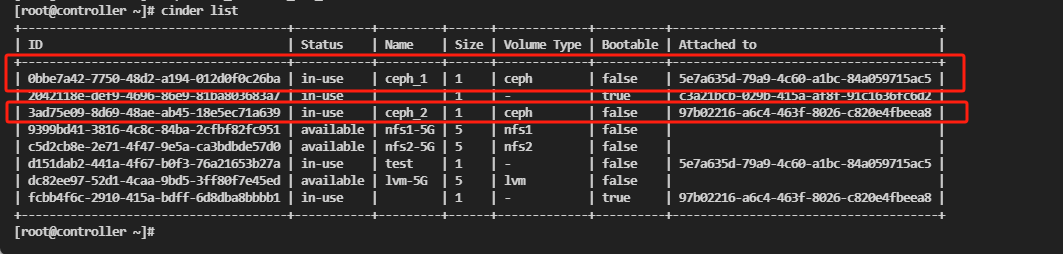

在cinder的控制主机中查看
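To match a volume seen on the cinder side with what Ceph stores, it helps to know that the RBD driver by default names each image volume-ID, where ID is the Cinder volume ID. The sketch below checks a pool listing for a given volume. The function name `volume_in_rbd_listing` and the sample ID are illustrative; on a real node the listing would come from something like `rbd ls volumes --id cinder`, which is an assumption about how the pool is queried rather than a step from the original post.

```shell
#!/bin/sh
# Succeeds if the RBD image for the given Cinder volume ID appears in the
# listing on stdin (one image name per line).
# volume_in_rbd_listing is a hypothetical helper for illustration.
volume_in_rbd_listing() {
    # -x: whole-line match, -F: fixed string, -q: no output
    grep -qxF "volume-$1"
}

# Illustrative sample listing; the ID below is made up.
sample_listing='volume-11111111-2222-3333-4444-555555555555'

if printf '%s\n' "$sample_listing" | volume_in_rbd_listing 11111111-2222-3333-4444-555555555555; then
    echo "image found in pool listing"
else
    echo "image missing from pool listing"
fi
```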